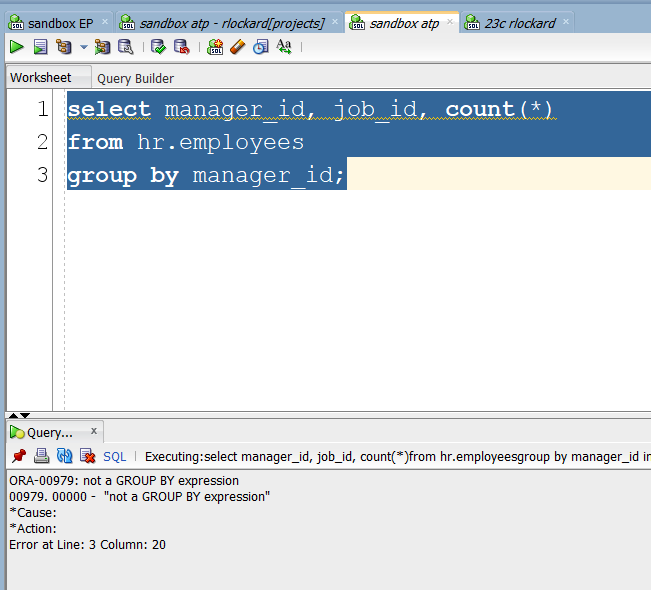

#Old Error Message

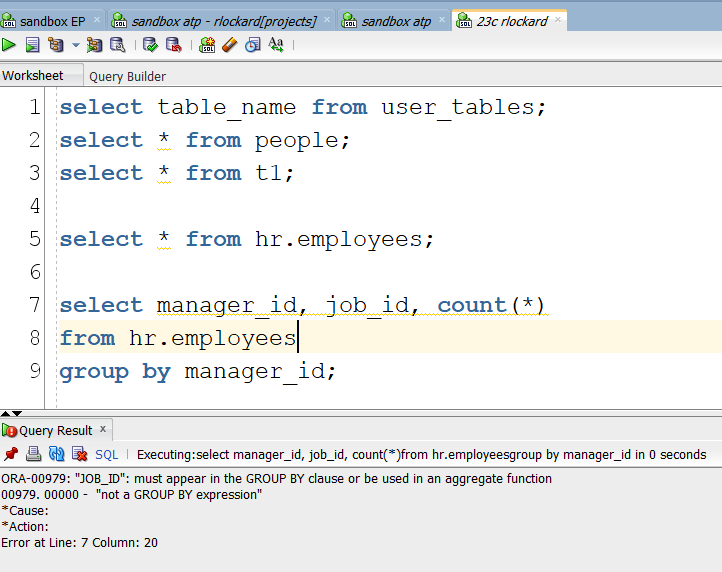

#New Error Message

Well, that makes it easier. I believe this will help people new to writing sql, I remember quite well when I was first learning, I would struggle understanding the error messages.

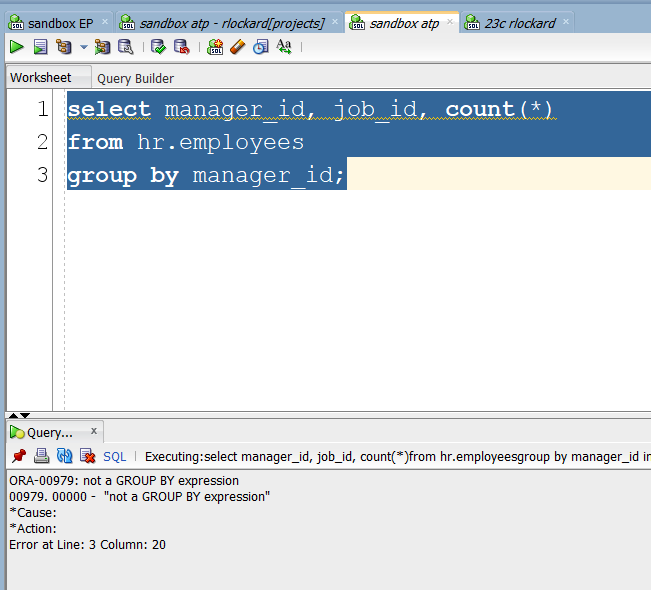

#Old Error Message

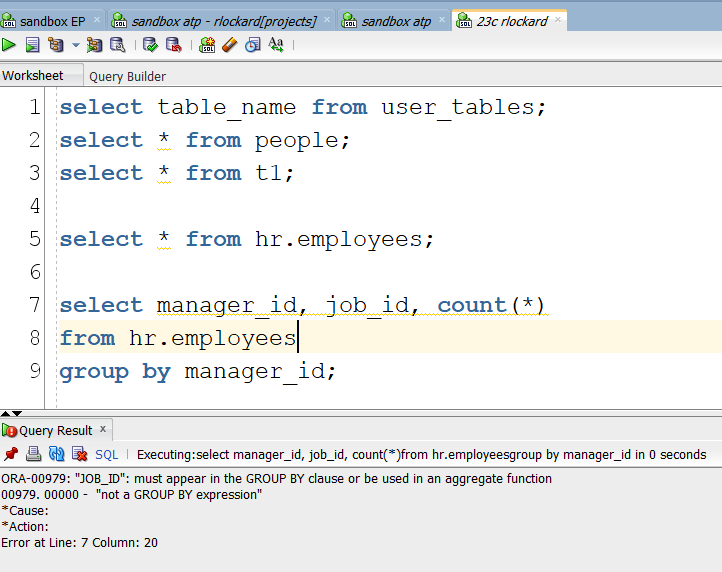

#New Error Message

Well, that makes it easier. I believe this will help people new to writing sql, I remember quite well when I was first learning, I would struggle understanding the error messages.

We’ve been using the dual table to return information on functions for longer than I care to think about. Now, in 23c you don’t need to include “from dual” in your code.

-- cold way

Selecting an expression without FROM DUAL

SQL> select sysdate, user from dual;

SYSDATE USER

____________ ___________

19-MAY-23 RLOCKARD

-- 23c new way.

Selecting an expression without FROM DUAL

SQL> select sysdate, user;

SYSDATE USER

____________ ___________

19-MAY-23 RLOCKARD

I love working from the command line. Now, don’t get me wrong, I spend quite a bit of time in #sqldeveloper and love it.

Quite a few of my scripts reside on my laptop where I can use my editor of choice to write them. Because of this, I normally run my scripts straight out of sqlcl. It’s quite simple.

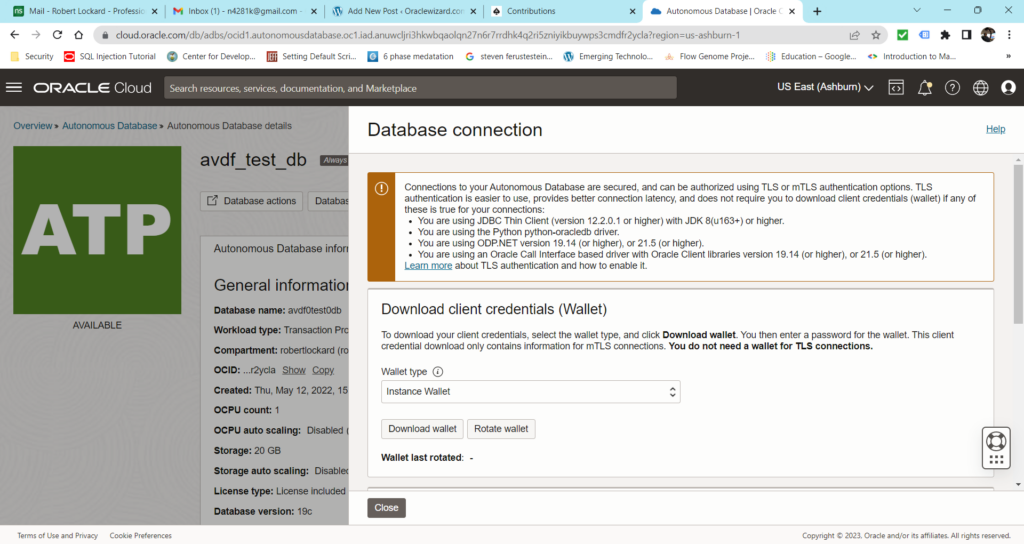

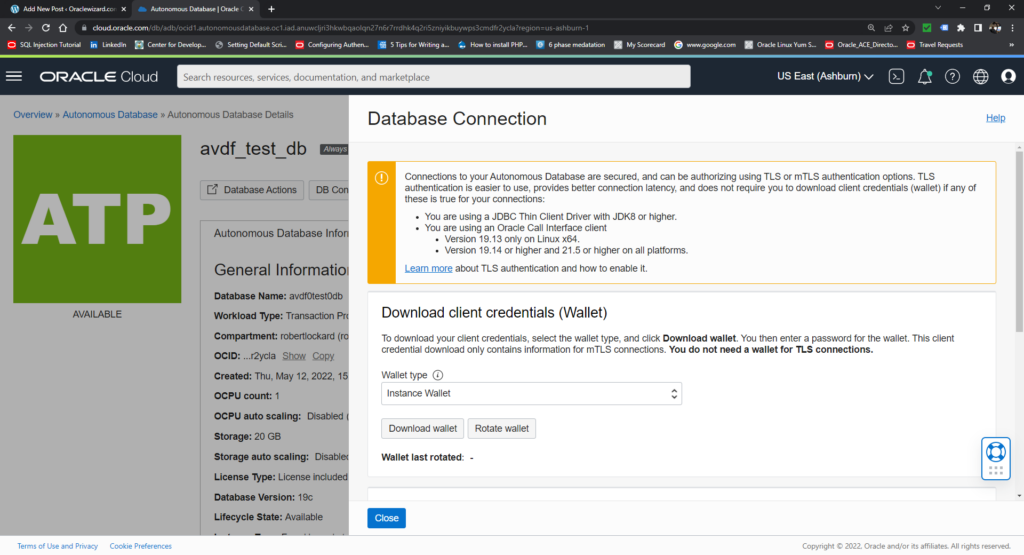

First off, you’ll need to download the wallet from oci.

You’ll need to enter a password for you wallet and download it.

Copy the wallet to a location of your choice.

Start up sqlcl, set cloudconfig to point to the wallet you just downloaded.

sql

set echo on

set cloudconfig C:\work\owi-database-wallets\avdf_test_db.zip

conn rlockard[projects]@avdf0test0db_highWhen you have developers, sometimes it’s challenging to determine just what roles they need. If you’re not careful, you’ll over privilege an account and just as anoying you may wind up under privilege an account and need to go back and keep adjusting it until you get it right.

In Oracle 23c we now have the DB_DEVELOPER_ROLE that will give developers all the privileges they need.

In the following example, we’re going to create a user test1 and grant they DB_DEVELOPER_ROLE and see just what all they got.

SQL> create user test1 identified by test1;

User TEST1 created.

SQL> grant unlimited tablespace to test1;

Grant succeeded.

SQL> grant db_developer_role to test1;

Grant succeeded.

SQL> conn test1/test1

Connected.

SQL> select granted_role from user_Role_privs;

GRANTED_ROLE

____________________

DB_DEVELOPER_ROLE

SQL> SELECT PRIVILEGE FROM ROLE_SYS_PRIVS WHERE ROLE='DB_DEVELOPER_ROLE';

PRIVILEGE

_____________________________

CREATE DOMAIN

CREATE MLE

CREATE ANALYTIC VIEW

CREATE HIERARCHY

CREATE ATTRIBUTE DIMENSION

EXECUTE DYNAMIC MLE

CREATE CUBE BUILD PROCESS

CREATE CUBE

CREATE CUBE DIMENSION

CREATE MINING MODEL

CREATE JOB

DEBUG CONNECT SESSION

ON COMMIT REFRESH

CREATE DIMENSION

CREATE TYPE

CREATE MATERIALIZED VIEW

CREATE TRIGGER

CREATE PROCEDURE

FORCE TRANSACTION

CREATE SEQUENCE

CREATE VIEW

CREATE SYNONYM

CREATE TABLE

CREATE SESSION

24 rows selected.

SQL> SELECT GRANTED_ROLE FROM ROLE_ROLE_PRIVS WHERE ROLE='DB_DEVELOPER_ROLE';

GRANTED_ROLE

_______________

SODA_APP

CTXAPP

SQL> SELECT TABLE_NAME FROM ROLE_TAB_PRIVS WHERE ROLE='DB_DEVELOPER_ROLE';

TABLE_NAME

___________________________

JAVASCRIPT

DBA_PENDING_TRANSACTIONS

V_$STATNAME

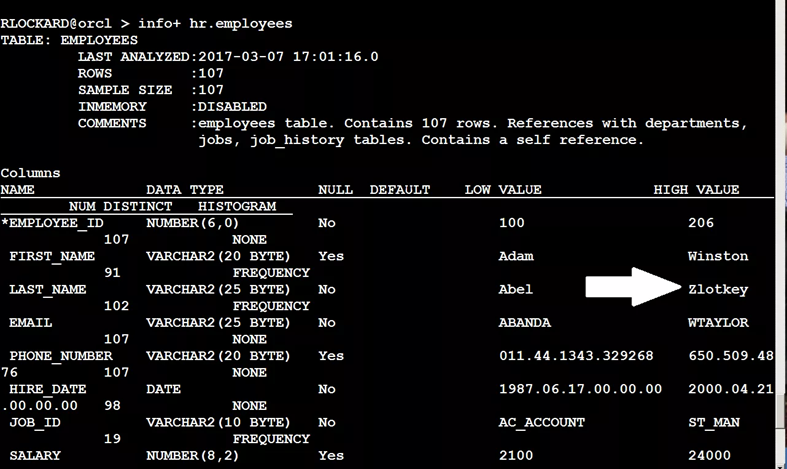

V_$PARAMETERWhen coming into a new environment and trying to learn a bit about the data my customer has; one of the tools I use is to grab histograms of key data. Now in sqlcl and sql developer you have the info+ command that will display histograms; however, if the optimizer did not generate histograms, the information provided will be of limited usefulness (in the sense of histograms)

A histogram will give you information on how the data is distributed, with text data, this is fairly easy using select column;, count(*) from t1 group by column1;

Dates and numbers, well that’s another story.

First I need to load up a table with some test data. updt_stat simply takes the order date and returns a status. This is to simulate and order entry system.

create sequence t1_seq;

create table t1 (

id number primary key,

order_date date not null,

col1 number not null,

status varchar2(25) not null);

alter table t1 modify id default t1_seq.nextval;

create or replace function updt_stat (pdate in date) return varchar2 as

value varchar2(10);

tmp number;

begin

tmp := dbms_random.value(0,10);

case

when pdate > sysdate - 50 and tmp > 9

then value := 'Canceled';

when pdate <= sysdate - 50

then value := 'Shipped';

when pdate >= sysdate - 50 and pdate < sysdate - 1

then value := 'Picked';

when pdate >= sysdate

then value := 'Ordered';

else

value := 'Back Order';

end case;

return value;

end;

/Now populate the table with some test data.

declare

--create or replace procedure simulate_order_entry as

i number; -- an index variable

sdate date; -- the date we are going to insert.

cursor c1 is

select dbms_random.value(0,10000) col1

from dual

connect by level <=36500;

begin

-- one year of data

select sysdate-365 into sdate from dual;

i := 1;

-- start the loop, 36,500 times

-- one year of data

for rec in c1

loop

if ( i / 100 = 1 ) then

i := 1;

sdate := sdate + 1;

end if;

insert into t1 (order_date, col1, status)

values

(sdate, rec.col1, updt_stat(sdate));

i := i+1; -- i++

end loop;

commit;

end;

/SQL> select status, count(*)

2 from t1

3* group by status;

STATUS COUNT(*)

_____________ ___________

Shipped 31284

Picked 4334

Canceled 553

Back Order 92

Ordered 237SQL> select min(order_date),

2 max(order_date),

3 width_bucket(order_date, sysdate-365, sysdate+20, 12) bucket,

4 count(*)

5 from t1

6 group by width_bucket(order_date, sysdate-365, sysdate+20, 12)

7* order by 1;

MIN(ORDER_DATE) MAX(ORDER_DATE) BUCKET COUNT(*)

__________________ __________________ _________ ___________

16-MAY-22 16-MAY-22 0 99

17-MAY-22 17-JUN-22 1 3168

18-JUN-22 19-JUL-22 2 3168

20-JUL-22 20-AUG-22 3 3168

21-AUG-22 21-SEP-22 4 3168

22-SEP-22 23-OCT-22 5 3168

24-OCT-22 24-NOV-22 6 3168

25-NOV-22 26-DEC-22 7 3168

27-DEC-22 27-JAN-23 8 3168

28-JAN-23 28-FEB-23 9 3168

01-MAR-23 02-APR-23 10 3267

03-APR-23 04-MAY-23 11 3168

05-MAY-23 19-MAY-23 12 1454SQL> select trunc(min(col1),4),

2 trunc(max(col1),4),

3 width_bucket(col1, 0, 10000, 10) bucket,

4 count(*)

5 from t1

6 group by width_bucket(col1, 0, 10000, 10)

7* order by 1;

TRUNC(MIN(COL1),4) TRUNC(MAX(COL1),4) BUCKET COUNT(*)

_____________________ _____________________ _________ ___________

0.1578 999.9308 1 3710

1000.0824 1999.9868 2 3543

2001.0208 2999.6238 3 3640

3000.4055 3999.8725 4 3692

4000.2374 4999.823 5 3751

5000.1659 5999.6815 6 3767

6000.3364 6999.9993 7 3660

7000.1511 7999.4147 8 3471

8000.284 8999.706 9 3651

9000.1652 9999.5417 10 3615There has been something that’s bothered me for a long time, Oracle 23c addresses it. In the old days, a database could be copied over to another machine along with it’s auto-login wallet; and the auto-login wallet would work. In 23c the auto-login wallet is now tied to the hardware/virtual machine.

When you create a new wallet using orapki, it creates a version 7 wallet, and if you alter an existing version 6 wallet with orapki, it will be converted to a version 7 wallet.

Note, in Oracle 23c, the version 6 wallet is depreciated. This a big enhancement to the security of the wallet.

In the old days before Oracle 23c, you had two options when creating build scripts. 1) Take the error message such as ORA-00942 Table or View does not exists, or you could write pl/sql and trap the error like this.

declare

procedure Drop_Table(p_table in varchar2) is

Table_Doesnt_Exist exception;

pragma Exception_Init(Table_Doesnt_Exist, -00942);

begin

execute immediate 'drop table '||p_table;

exception when Table_Doesnt_Exist then null;

end Drop_Table;

begin

Drop_Table(‘t’);

end;

/

This worked well; however, as you can see your cleanup code could take up some space. So, your choice, accept the error message or write some code. Both worked. But now we have a better way, if Oracle 23c you now have the if exists and if not exists options. Life is good.

SQL> create table if not exists t (x number);

Table T created.

SQL> drop table if exists t;

Table T dropped.

In Oracle 23c we can now grant privileges on a schema to a user. Now, in previous versions of Oracle, you never wanted to grant with the “ANY” clause; however, now we can just apply that to a schema. In the bellow example, we’re granting select any table, and create any table on the HR schema to usr1,

SQL> GRANT SELECT ANY TABLE ON SCHEMA HR TO usr1;

Grant succeeded.

SQL> grant create any table on schema hr to usr1;

Grant succeeded.

This goes a long way to helping us manage privileges and also help enforce the principle of least privilege.

SQL> conn usr1/secret

Connected.

SQL> create table hr.t (x number);

Table HR.T created.

SQL> drop table hr.t;

Error starting at line : 1 in command –

drop table hr.t

Error report –

ORA-01031: insufficient privileges

But you’ll notice, because drop table was not granted, the user usr1 can not drop the table he/she just created.

“Welcome to Grey Beards #2 with Oracle ACE Director Craig Shallahamer, where we delve into the exciting world of Oracle technology and beyond! In this episode, we’re discussing the back story behind the sale of OraPub to Viscocity, the impact of AI and Machine Learning on the industry, and the exciting capabilities of Chat GPT (Generative Pre-Trained). We’ll also explore the concept of Constitutional AI and the importance of passion in one’s work. Our guest, Craig Shallahamer, shares his own career trajectory and shares his tips on making an impact in your work. Get ready for some war stories of when things went wrong and a discussion on career pivots vs major career rehosting. This episode is packed with insights and inspiration, don’t miss it!”

There are days when I honestly want to hang up my spurs and retire. To be honest, the problem is not with TDE; it’s with some people not understanding how TDE works.

There are a couple ways you can implement Oracle TDE, in this example; we’re going to be discussing Column Encryption and Tablespace Encryption. It’s important to understand what Oracle does in the background for encrypting and decrypting data for column and tablespace encryption.

When doing column encryption, every row/column goes through an encryption/decryption process. Therefore, if you do a full table scan on 100,000 rows that are encrypted, there will be 100,000 decrypt operations performed and if you’re updating 100,000 rows, there will be 100,000 decrypt and encrypt operations performed. So, you will be seeing a performance impact when doing column encryption.

Other down sides of doing column encryption is you can’t put foreign key restraints on encrypted columns. One of the attack vectors on encrypted columns is to do a statistical analysis on the data to determine what the plain text data is. To get around this, you add salt to the encryption; however, if you do that, then you can not index the encrypted column.

With column encryption, there is the advantage that all supporting objects (indexes, materialized views) columns will be encrypted. This is not true for tablespace encryption.

When doing tablespace encryption (recommended for most use cases) encryption / decryption operations are done at the block level. Therefore, if you have 100,000 rows and there are 200 rows per block, then there will be 500 decryption operations in a full table scan. And there you have it. By doing tablespace encryption, the number of encryption/decryption operations is reduced as compared to column encryption.

Now, the downside of tablespace encryption, if your indexes or materialized views are in a tablespace that is not encrypted, then your indexes or materialized views will not be encrypted. For this reason I always recommend encrypting all tablespaces in the database. (Commonly referred to full database encryption.)

Here are the results of some performance test I’ve done on column based vs. tablespace encryption. Each table has 500,000 rows. All times are in seconds.

| Table | Encryption | Insert Time | Update Time | Full Table scan | |

| T0 | No encryption | 3.75 | 3.508 | 0.785 | |

| T1 | Column Encryption | 29.5 | 90.02 | 16.621 | |

| T2 | Tablespace Encryption | 4.01 | 3.9 | 0.04 |

Here is my code if you’d like to duplicate my test.

set echo on

set timing on

set feedback on

-- cleanup.

drop table rlockard.t0;

drop table rlockard.t1;

drop table rlockard.t2;

drop sequence rlockard.t_seq;

-- check to see if the DATA tablespace is

-- encrypted. No, it's not encrypted, so we're

-- going to put our table with an encrypted column

-- in the DATA tablespace.

select encrypted from dba_tablespaces

where tablespace_name = 'DATA';

create table rlockard.t0 (id number primary key,

n1 number)

tablespace data;

-- we need a sequence for our primary key.

create sequence rlockard.t_seq;

insert into rlockard.t0 (

select rlockard.t_seq.nextval,

sys.dbms_random.value(0,50000)

from dual

connect by level <= 500000);

commit;

select avg(n1) from rlockard.t0;

update rlockard.t0

set n1 = n1*2

where mod(trunc(n1),2) = 0;

commit;

-- number of blocks used by t, where each element nbr is encrypted.

analyze table rlockard.t0 compute statistics;

select blocks - empty_blocks

from dba_tables

where table_name = 'T0'

and owner = 'RLOCKARD';

-- average number of rows in a block where each element is encrypted.

select avg(row_count) from (

select dbms_rowid.ROWID_BLOCK_NUMBER( rowid ), count(*) row_count

from rlockard.t0

group by dbms_rowid.ROWID_BLOCK_NUMBER( rowid ))

/

-- drop t0 to free up space.

drop table rlockard.t0;

-- create a test table in an unencrypted tablespace and use salt.

-- to keep things simple, we're not going to use salt in this

-- example. Just use simple aes256 encryption.

create table rlockard.t1 (id number primary key,

n1 number encrypt using 'aes256')

tablespace data;

-- populate the table with 500,000 rows.

insert into rlockard.t1 (

select rlockard.t_seq.nextval,

sys.dbms_random.value(0,50000)

from dual

connect by level <= 500000);

commit;

-- this will do a full tablescan and perform 500,000

-- decryption operations.

select avg(n1) from rlockard.t1;

-- will need to decrypt and then encrypt each n1. therefore,

-- there will be 500,000 encryption calculations and aprox

-- 250,000 encryption calculations.

update rlockard.t1

set n1 = n1*2

where mod(trunc(n1),2) = 0;

commit;

-- number of blocks used by t, where each element nbr is encrypted.

analyze table rlockard.t1 compute statistics;

select blocks - empty_blocks

from dba_tables

where table_name = 'T1'

and owner = 'RLOCKARD';

-- average number of rows in a block where each element is encrypted.

select avg(row_count) from (

select dbms_rowid.ROWID_BLOCK_NUMBER( rowid ), count(*) row_count

from rlockard.t1

group by dbms_rowid.ROWID_BLOCK_NUMBER( rowid ))

/

-- okay, so what happens when we access by primary key and it's not encrypted.

-- this will update 1/2 of the rows where the primary key is an even number.

-- 250,000 updates by primary key.

select encrypted from dba_tablespaces

where tablespace_name = 'USERS';

-- we're going to put t2 into an encrypted tablespace.

create table rlockard.t2 (id number primary key,

n1 number)

tablespace users;

-- insert 500,000 rows, each block will be encrypted. There will be

-- multiple rows stored in each block.

insert into rlockard.t2 (

select rlockard.t_seq.nextval,

sys.dbms_random.value(0,50000)

from dual

connect by level <= 500000);

commit;

-- a full table scan will be performed and

-- each block will be decrypted. We'll get

-- the number of decryption operations in a moment.

select avg(n1) from rlockard.t2;

-- decryption and decryption will happen at the block level.

-- logically mod(trucn(n1),2) = 0 should be evenly distrbuted

-- in the blocks, therefore will still have good peformance.

update rlockard.t2

set n1 = n1*2

where mod(trunc(n1),2) = 0;

commit;

-- number of blocks used by t2 in the encrypted tablespace. This will

-- give us the number of encryption operations.

analyze table rlockard.t2 compute statistics;

select blocks - empty_blocks

from dba_tables

where table_name = 'T2'

and owner = 'RLOCKARD';

-- average number of rows in a block used by t2 in the encrypted tablespace.

-- this will give us the number of rows decrypted in each encryption operation.

select avg(row_count) from (

select dbms_rowid.ROWID_BLOCK_NUMBER( rowid ), count(*) row_count

from rlockard.t2

group by dbms_rowid.ROWID_BLOCK_NUMBER( rowid ))

/We’ve come a long way from 10g XE to 23c Free Developers edition.

Roger Cormejo and I discuss tactics you can use to protect yourself and make yourself relevant.

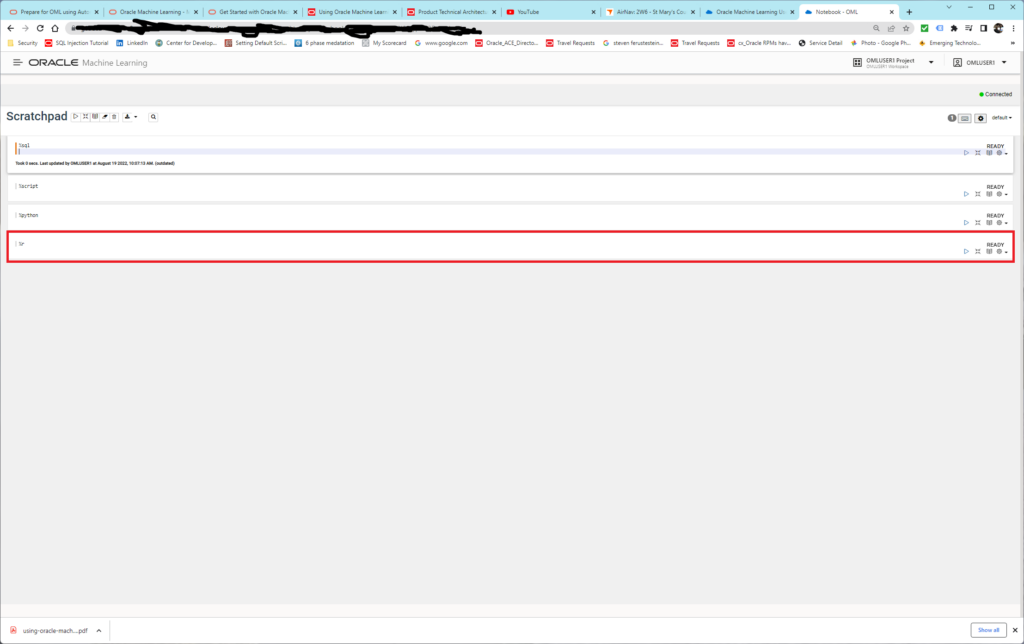

I’ve been working learning Oracle Machine Learning and noticed a new feature this morning. You now have the ability to run R scripts in scratchpad.

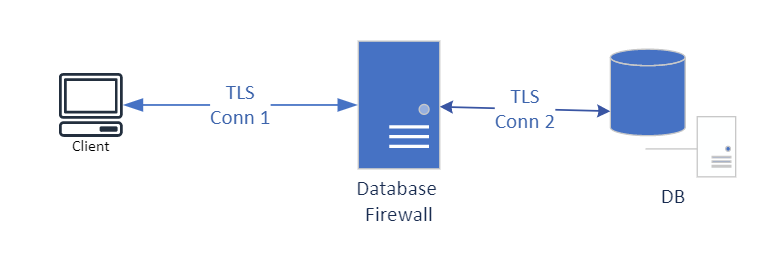

Here we’re just discussing Database Firewall!

Oracle AVDF 20.7 now supports TLS. Here I’m using TLS Conn 1 and TLS Conn 2 to represent two distint TLS connections. For database firewall to use this, the traffic must be decrypted at the database firewall to be analyzed. Then the database firewall acts as a client to the database and creates a new TLS connection.

NOTE: To keep this simple, I did not add in Audit Vault (required) and associated high availability instances.

Dude, it’s easy!

Download the wallet.

I will rename the zip file to something that makes sense to me. In this case avdf_test_db.zip (that’s because I’m using this database to test Audit Vault Database Firewall. See, it makes sense!

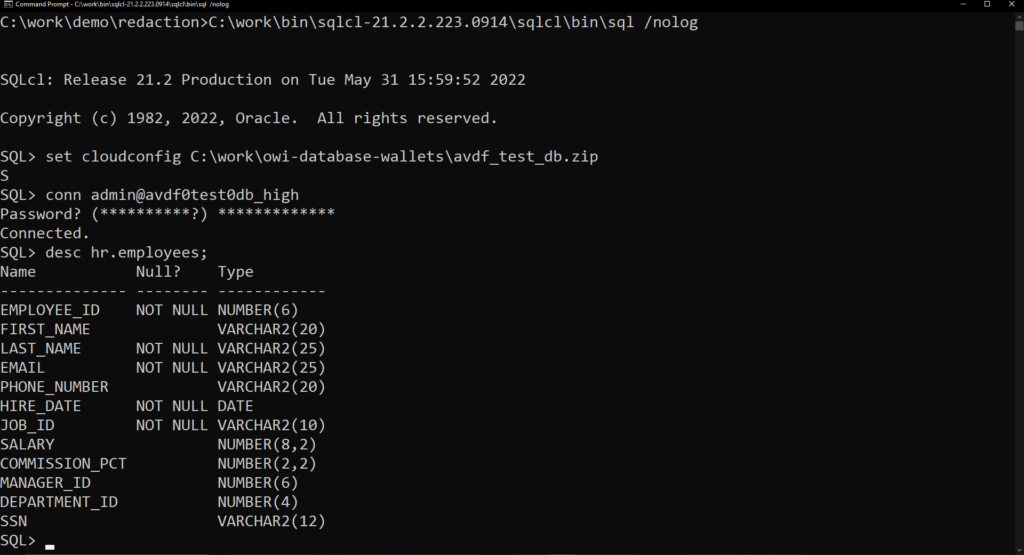

startup sqlcl using the /nolog option. Set cloudconfig, then execute your connection.

C:\work\bin\sqlcl-21.2.2.223.0914\sqlcl\bin\sql /nolog

set cloudconfig C:\work\owi-database-wallets\avdf_test_db.zip

conn admin@avdf0test0db_high

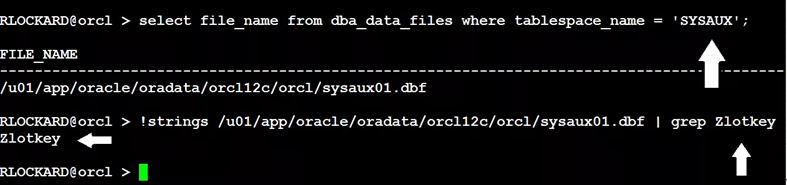

Statistics data is stored in the sysaux tablespace, if you’re not using full database encryption, then you have unencrypted data. In OCI, this is encrypted by default.

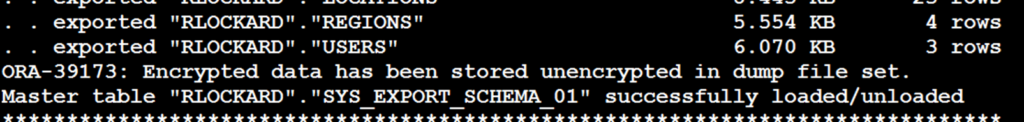

If you use datapump to get an export of your database, then please encrypt your exports. Even if you’re using TDE, this spillage can happen.

To fix this, use the encryption parameter on the command line. This will prevent data from inavertantly spilling.

--As a user with create role privileges and the ability to grant -- the required privileges to the role. ie: DBA conn rob_dba@demo create role <role>; grant <privilege> on <object owner>.<object name> to <role>; grant <role> to <package owner> with delegate option; conn <package owner>@<instance> grant <role> to package <package name>;

GRANTING THE ROLE TO THE PACKAGE OWNER WITH DELEGATE OPTION: This is the preferred method to grant a role to a package. It appears the same as #1; however we are granting the package owner the role with delegate option. Using this method, we can grant the package the required role, but the package owner can not grant the role outside of it’s schema. This is the least amount of privileges needed to execute the task.

After migrating your database to OCI; if you don’t need the data anymore on your local system(s), you should do a secure delete on all your local datafiles. If you are going to need the data again, then move the data to encrypted devices.

Do you see the problem with the following code fragment?

c##sec_admin > administer key management set keystore open identified by SecretPassword;

If your network is not encrypted, your password will be sent in the clear. This is part of the huge advantage of moving to OCI, network is encrypted by default.

Ya’ know, it’d be really nice if someone put together some baseline security standards for the Oracle database. Well, you can find those is a couple of places. These tools checklist are free to use. Now, I’m partial to the DISA STIGs; however, the CIS Benchmarks are really just as good.

Here are the DISA (Defence Information Systems Agency) STIGs. You can get these from a couple different sources: Here is NIST: https://ncp.nist.gov/checklist/667 and here is from cyber.mil https://public.cyber.mil/stigs/downloads/?_dl_facet_stigs=app-security then enter Oracle in the search box.

Now, there’s a reason I say “baseline,” ya’ see, even though this is a good place to start, there are things that are not covered or not covered very well. Once you’ve gone through the checklist, and secured your database, you’ll need to do testing to make sure you did not break anything, and you’ll should also look at enhancing these check list by using other tools and techniques. Some of the tools and techniquest you can look to include are: Code Based Access Control, AVDF (Audit Vault Database Firewall), Database Vault, Fine Grained Auditing, Real Application Security, and so much more.

Oracle AFDF can track the usage of sensitive data in your database, this includes, who can access, who has made changes, and access by privileged users. Improving your ability to keep an eye on what is happening with sensitive data.

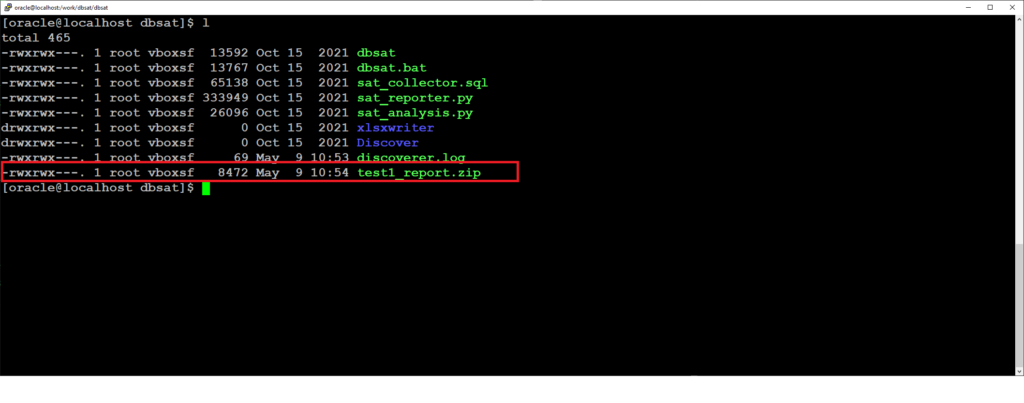

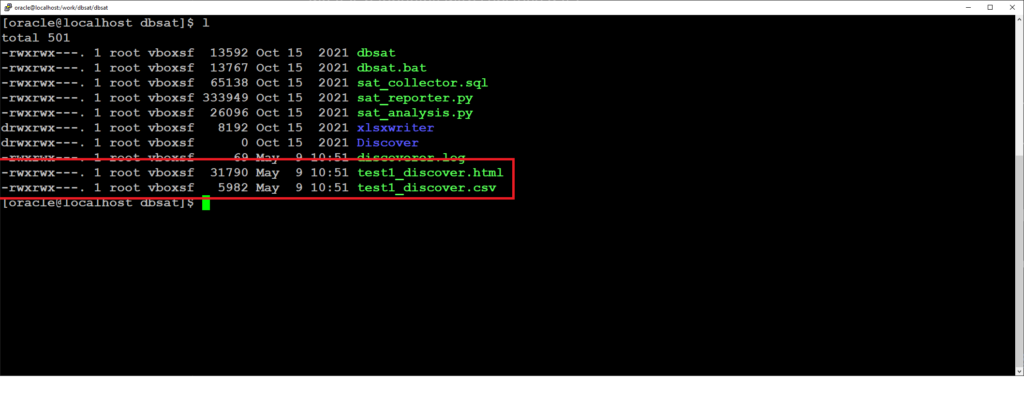

How to Discover sensitive data for loading into AVDF.

Upload sensitive data metadata into Audit Vault

Sensitive data reports

Advantages: